Artificial intelligence has entered a new era where systems don’t merely respond to commands—they actively plan, reason, and execute complex tasks independently. AI agents represent this fundamental transformation, embodying the next generation of intelligent software that can perceive environments, make autonomous decisions, and coordinate actions across multiple systems to achieve predefined goals.

Unlike traditional software applications that follow rigid, predetermined workflows, AI agents operate with a degree of genuine agency: the capacity to act independently and adapt to changing circumstances. This distinction marks a paradigm shift in how we conceive of artificial intelligence, moving from static, reactive tools to dynamic, goal-oriented systems whose plan-act-review loop loosely resembles human problem solving.

Agent loop diagram showing AI agent architecture with task planning, tool usage, working memory editing, external feedback, and response generation

What is an AI Agent?

An AI agent is a software system that can interact with its environment, collect and process data, and perform self-directed tasks to meet predetermined goals without constant human intervention. At its core, an AI agent follows the fundamental “sense → decide → act” loop: it perceives its environment through inputs, makes intelligent decisions based on reasoning, and executes actions to influence that environment.
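The sense → decide → act loop can be sketched in a few lines of Python. This is a minimal illustration that assumes a toy room environment and a hard-coded decision rule. `Room`, `sense`, `decide`, `act`, and `run` are hypothetical names. In an LLM-based agent, `decide` would be a model call rather than an if-statement.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Toy environment (hypothetical): a room whose temperature the agent can change."""
    temperature: float


def sense(env: Room) -> float:
    # Sense: read the current state of the environment.
    return env.temperature


def decide(temperature: float, target: float = 21.0) -> str:
    # Decide: map the observation to an action (a stand-in for model reasoning).
    if temperature < target - 0.5:
        return "heat"
    if temperature > target + 0.5:
        return "cool"
    return "idle"


def act(env: Room, action: str) -> None:
    # Act: change the environment, which changes what the agent senses next.
    if action == "heat":
        env.temperature += 1.0
    elif action == "cool":
        env.temperature -= 1.0


def run(env: Room, steps: int) -> list:
    history = []
    for _ in range(steps):
        action = decide(sense(env))
        act(env, action)
        history.append(action)
    return history


room = Room(temperature=18.0)
print(run(room, 5))  # ['heat', 'heat', 'heat', 'idle', 'idle']
```

The key property is the feedback: each action changes the environment, so the next observation and decision depend on what the agent just did.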

The defining characteristic of AI agents is their autonomy. While traditional software executes pre-programmed instructions deterministically, AI agents use reasoning to interpret new situations and handle unforeseen scenarios without explicit reprogramming. An AI agent acting as a customer service representative, for instance, can autonomously ask customers clarifying questions, look up information in internal documents, determine whether it can resolve an issue on its own, and recognize when to escalate to a human representative.

Key Components of AI Agents

An effective AI agent architecture consists of several interconnected components:

- Foundation Model (LLM): The reasoning engine, typically a large language model such as GPT or Claude, which interprets natural language, generates human-like responses, and reasons over complex instructions

- Perception Module: Gathers and processes data from various sources including structured databases, unstructured text, sensors, and APIs

- Cognitive/Reasoning Layer: Analyzes information and makes decisions using the foundation model and decision-making algorithms

- Planning Module: Breaks down complex goals into manageable steps and sequences them logically using hierarchical task networks or algorithmic strategies

- Memory Systems: Stores information for recall and learning, including short-term memory for current interactions and long-term memory for historical knowledge

- Action Module: Executes decisions through APIs, automation scripts, or physical actuators

- Feedback Loop: Observes outcomes, learns from results, and continuously refines decision-making for improved performance
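A minimal sketch of how these components might be wired together follows. It assumes a keyword rule (`stub_model`, hypothetical) in place of a real foundation model and lambdas in place of real APIs. The reasoning/planning engine, tools, and two memory stores are separate, swappable fields. The class and method names are illustrative, not any framework's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def stub_model(goal: str) -> List[str]:
    # Hypothetical stand-in for the foundation model: a real agent would ask an LLM
    # to decompose the goal. Here a keyword rule returns a fixed plan.
    if "order" in goal:
        return ["lookup_order", "notify_customer"]
    return []


@dataclass
class Agent:
    model: Callable[[str], List[str]]                          # reasoning / planning engine
    tools: Dict[str, Callable[[dict], str]]                     # action module
    short_term: List[str] = field(default_factory=list)         # current interaction
    long_term: Dict[str, str] = field(default_factory=dict)     # persists across tasks

    def perceive(self, raw_input: str) -> str:
        # Perception: normalize raw input and remember it for this interaction.
        text = raw_input.strip().lower()
        self.short_term.append(text)
        return text

    def plan(self, goal: str) -> List[str]:
        # Planning: keep only the steps the agent actually has tools for.
        return [step for step in self.model(goal) if step in self.tools]

    def act(self, steps: List[str], context: dict) -> List[str]:
        # Action + feedback: run each tool and store the outcome in long-term memory.
        results = []
        for step in steps:
            outcome = self.tools[step](context)
            self.long_term[step] = outcome
            results.append(outcome)
        return results


tools = {
    "lookup_order": lambda ctx: f"order {ctx['id']} delayed",
    "notify_customer": lambda ctx: f"emailed {ctx['email']}",
}
agent = Agent(model=stub_model, tools=tools)
goal = agent.perceive("  Check ORDER status ")
steps = agent.plan(goal)
results = agent.act(steps, {"id": 42, "email": "customer@example.com"})
print(results)  # ['order 42 delayed', 'emailed customer@example.com']
```

Filtering the plan against the registered tools is one simple way to keep the model from requesting actions the agent cannot perform.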

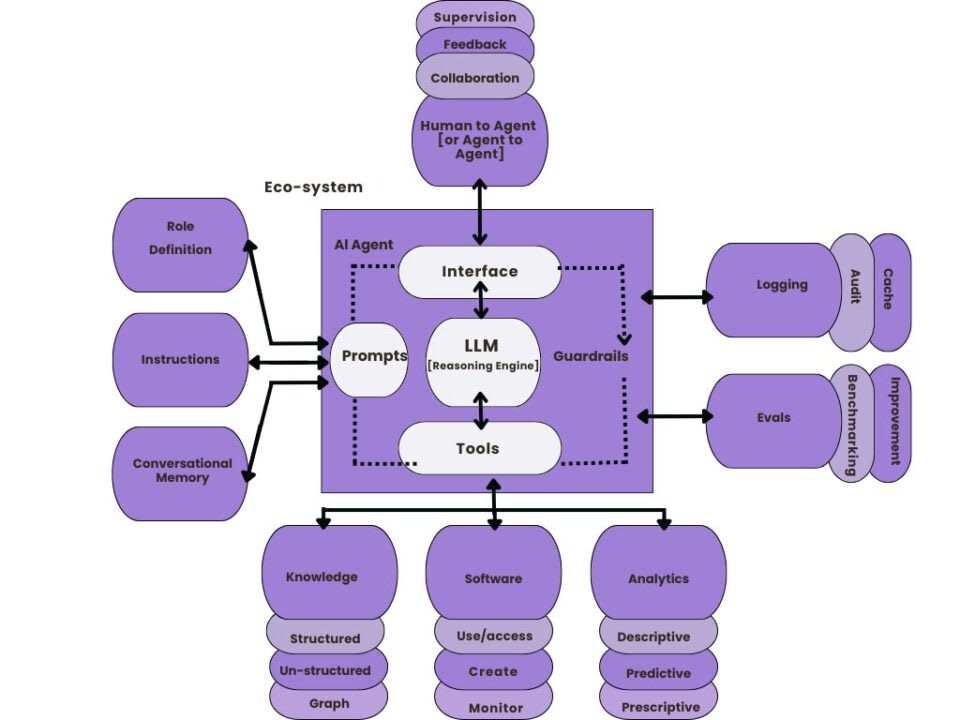

Diagram showing the anatomy and ecosystem of an AI agent, highlighting its core components and interactions

How AI Agents Work: The Operational Cycle

AI agents operate through a continuous iterative cycle that mirrors human problem-solving processes:

1. Perceive and Observe

The agent observes its environment, collecting data from multiple sources and identifying current goals and constraints. This perception layer extracts relevant information from raw data using techniques like natural language processing and computer vision.

2. Plan and Reason

Using its foundation model and planning module, the agent decomposes complex objectives into manageable subgoals. It considers available tools, past experience (from memory), and environmental constraints to formulate a logical sequence of actions.

3. Act and Execute

The agent executes planned actions through available tools and APIs. Whether updating a database, sending an email, or triggering a workflow, the action module translates decisions into concrete outcomes.

4. Reflect and Learn

After execution, the agent observes the results, comparing them against expectations. This reflection generates feedback that updates the agent’s knowledge base and informs future decisions, enabling continuous improvement through the feedback loop.

5. Adapt and Improve

Based on outcomes and feedback, the agent adjusts its strategies and decision-making parameters. Over many cycles, these adjustments can make the agent progressively more capable.

This cycle (observe, plan, act, reflect, adapt) lets AI agents become more efficient, accurate, and capable without retraining the underlying model, because the improvements are typically stored in memory, prompts, and strategies rather than in the model’s weights.
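The five-step cycle can be illustrated with a toy numeric task. This is a sketch under simplifying assumptions: the "goal" is a target value, the "plan" is a move of the current step size, and "adapt" halves the step after an overshoot. `run_cycle` is a hypothetical name. The point is that adaptation changes a strategy parameter, not a model.

```python
from typing import List, Tuple


def run_cycle(target: float, start: float = 0.0, step: float = 8.0,
              max_iters: int = 20, tol: float = 0.01) -> Tuple[float, List[float]]:
    position = start
    trace = []
    for _ in range(max_iters):
        # 1. Observe: measure the gap between the current state and the goal.
        gap = target - position
        if abs(gap) <= tol:
            break
        # 2. Plan: choose a move toward the target using the current step size.
        move = step if gap > 0 else -step
        # 3. Act: apply the move.
        position += move
        trace.append(position)
        # 4. Reflect: an overshoot means the gap changed sign.
        overshot = (target - position) * gap < 0
        # 5. Adapt: after an overshoot, halve the step for finer future moves.
        if overshot:
            step /= 2
    return position, trace


final, trace = run_cycle(target=5.0)
print(final, trace)  # 5.0 [8.0, 4.0, 6.0, 5.0]
```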

Diagram showing an AI agent’s perception, reasoning, and action cycle with feedback, illustrating its interaction with the external environment

Types of AI Agents

The field recognizes several distinct categories of AI agents, each with unique capabilities and applications:

Simple Reflex Agents

These agents react to current environmental inputs using predefined condition-action rules. They lack memory and complex reasoning capabilities, which makes them suitable only for fully observable, predictable environments where the right action depends solely on the current input.

Example: Thermostats responding to temperature thresholds or email auto-responders triggering based on specific keywords.
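A keyword-based auto-responder shows the condition-action pattern directly. This is a minimal sketch: the rules and canned replies are hypothetical. Note that the function keeps no state, so the same email always produces the same response.

```python
from typing import List, Optional, Tuple

# Hypothetical condition-action rules, checked in order; the first match wins.
RULES: List[Tuple[str, str]] = [
    ("refund", "Thanks for writing. Refund requests are processed within 5 business days."),
    ("password", "You can reset your password from the sign-in page."),
]


def reflex_responder(email_body: str) -> Optional[str]:
    text = email_body.lower()
    for keyword, reply in RULES:
        if keyword in text:  # condition
            return reply     # action
    return None  # no rule fires, so the agent does nothing


print(reflex_responder("Where is my REFUND?"))
print(reflex_responder("Just saying hi"))  # None
```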

Model-Based Reflex Agents

Operating in partially observable environments, these agents maintain an internal model of the world, combining current sensory input with past knowledge to make informed decisions.

Example: Self-driving cars using sensor data combined with knowledge of road rules and vehicle dynamics.
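The difference from a simple reflex agent is the internal state. The sketch below is a toy following-distance controller, assuming a sensor that sometimes returns no reading and a fixed closing speed (both hypothetical). When the sensor drops out, the agent acts on its own prediction instead of having nothing to go on.

```python
from typing import Optional


class FollowingDistanceAgent:
    """Model-based reflex agent (toy): tracks the gap to the car ahead."""

    def __init__(self, closing_speed: float = 2.0, safe_gap: float = 10.0):
        self.estimated_gap: Optional[float] = None  # internal model of the world
        self.closing_speed = closing_speed  # assumed metres lost per step without a reading
        self.safe_gap = safe_gap

    def update_model(self, reading: Optional[float]) -> None:
        if reading is not None:
            self.estimated_gap = reading  # trust a fresh sensor reading
        elif self.estimated_gap is not None:
            self.estimated_gap -= self.closing_speed  # predict while the sensor is blocked

    def act(self, reading: Optional[float]) -> str:
        self.update_model(reading)
        if self.estimated_gap is None:
            return "slow_down"  # nothing known yet, so be cautious
        return "brake" if self.estimated_gap < self.safe_gap else "maintain"


agent = FollowingDistanceAgent()
print([agent.act(r) for r in [12.0, None, None]])  # ['maintain', 'maintain', 'brake']
```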

Goal-Based Agents

These agents evaluate future outcomes and select actions that achieve specific goals, offering greater flexibility in multi-step tasks.

Example: Route planning applications optimizing travel paths or treatment planning systems in healthcare.
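A goal-based agent searches ahead for a sequence of actions that reaches the goal. This sketch uses breadth-first search over a hypothetical road graph, which finds the route with the fewest hops. Real route planners typically use weighted shortest-path algorithms such as Dijkstra’s or A* instead.

```python
from collections import deque
from typing import Dict, List, Optional


def plan_route(graph: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    # Breadth-first search returns a path with the fewest hops, or None if unreachable.
    frontier = deque([start])
    came_from: Dict[str, Optional[str]] = {start: None}
    while frontier:
        node = frontier.popleft()
        if node == goal:
            path = []
            current: Optional[str] = node
            while current is not None:  # walk back to the start
                path.append(current)
                current = came_from[current]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in came_from:
                came_from[neighbor] = node
                frontier.append(neighbor)
    return None


roads = {"home": ["A", "B"], "A": ["office"], "B": ["C"], "C": ["office"]}
print(plan_route(roads, "home", "office"))  # ['home', 'A', 'office']
```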

Utility-Based Agents

Evaluating potential actions based on expected utility or benefit, these agents excel in complex decision-making environments with multiple outcomes.

Example: Dynamic pricing systems adjusting prices in real-time, or financial trading systems maximizing returns while minimizing risks.
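Expected-utility selection can be shown with two hypothetical trades. Each action has a list of (probability, payoff) outcomes, and the agent picks the action with the highest probability-weighted utility. The payoffs and the loss-averse utility function are illustrative assumptions.

```python
from typing import Callable, Dict, List, Tuple

Outcome = Tuple[float, float]  # (probability, payoff)


def expected_utility(outcomes: List[Outcome], utility: Callable[[float], float]) -> float:
    return sum(p * utility(payoff) for p, payoff in outcomes)


def choose_action(actions: Dict[str, List[Outcome]],
                  utility: Callable[[float], float] = lambda x: x) -> str:
    # Pick the action whose probability-weighted utility is highest.
    return max(actions, key=lambda a: expected_utility(actions[a], utility))


def loss_averse(x: float) -> float:
    # Losses count twice as much as gains of the same size.
    return x if x >= 0 else 2.0 * x


trades = {
    "risky_trade": [(0.5, 200.0), (0.5, -100.0)],  # expected payoff 50
    "safe_trade": [(1.0, 40.0)],                    # expected payoff 40
}
print(choose_action(trades))               # risky_trade
print(choose_action(trades, loss_averse))  # safe_trade
```

The utility function is where "maximizing returns while minimizing risks" becomes concrete: the outcomes stay the same, but a different utility changes the decision.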

Learning Agents

Incorporating feedback and experience, learning agents continuously improve their performance, for example through reinforcement learning.

Example: Recommendation systems that adapt suggestions based on user behavior and preferences.
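One of the simplest learning agents is an epsilon-greedy multi-armed bandit. It mostly recommends the item with the best observed reward and occasionally explores another one. This is a toy sketch, assuming simulated users with hypothetical click-through rates. Production recommenders are far more elaborate.

```python
import random
from typing import Dict, Iterable, Optional


class EpsilonGreedyRecommender:
    """Learning agent (toy): an epsilon-greedy multi-armed bandit."""

    def __init__(self, items: Iterable[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.items = list(items)
        self.epsilon = epsilon
        self.counts: Dict[str, int] = {item: 0 for item in self.items}
        self.values: Dict[str, float] = {item: 0.0 for item in self.items}  # mean reward
        self.rng = random.Random(seed)

    def recommend(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.items)                  # explore
        return max(self.items, key=lambda i: self.values[i])   # exploit best estimate

    def update(self, item: str, reward: float) -> None:
        # Incremental mean: new_estimate = old + (reward - old) / n
        self.counts[item] += 1
        self.values[item] += (reward - self.values[item]) / self.counts[item]


# Simulated users with hypothetical click-through rates per genre.
click_rate = {"jazz": 0.7, "rock": 0.2, "pop": 0.4}
agent = EpsilonGreedyRecommender(click_rate, epsilon=0.1, seed=0)
for _ in range(1000):
    item = agent.recommend()
    clicked = agent.rng.random() < click_rate[item]
    agent.update(item, 1.0 if clicked else 0.0)
print(agent.values)
```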

Tool-Using LLM Agents

Modern agents that plan actions and call external tools through function calling, leveraging the broad capabilities of an LLM with minimal custom code.

Example: Booking systems, research agents, report generation, or code modification tools.
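The core of a tool-using agent is a registry of functions plus a dispatcher that executes whichever tool the model asks for. In this sketch, `fake_llm` is a hypothetical stand-in for a model with function calling. The JSON shape it returns is illustrative only, because real providers each define their own tool-call format.

```python
import json
from typing import Callable, Dict

TOOLS: Dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # canned result; a real tool would call a weather API


@tool
def book_table(restaurant: str, people: int) -> str:
    return f"Booked {restaurant} for {people} people"


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model with function calling: it replies with a
    # JSON tool call instead of prose. The JSON shape here is illustrative only.
    if "weather" in prompt.lower():
        return json.dumps({"tool": "get_weather", "arguments": {"city": "Paris"}})
    return json.dumps({"tool": "book_table",
                       "arguments": {"restaurant": "Chez Nous", "people": 2}})


def run_agent(prompt: str) -> str:
    call = json.loads(fake_llm(prompt))
    name, args = call["tool"], call["arguments"]
    if name not in TOOLS:  # never execute a tool the agent was not given
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**args)


print(run_agent("What's the weather like?"))  # Sunny in Paris
print(run_agent("Book dinner for two"))       # Booked Chez Nous for 2 people
```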

Embodied/Robotic Agents

Physical systems controlled by AI that interact directly with the real world.

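Example: Manufacturing robots, agricultural drones, or robot-assisted surgical systems (although platforms such as da Vinci are currently operated by a surgeon rather than acting autonomously).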

Comparison of AI Agents, Agentic AI, and Autonomous AI showing increasing levels of autonomy and functionality

AI Agents vs. AI Chatbots: Understanding the Distinction

While often conflated, AI agents and AI chatbots represent fundamentally different capabilities and design philosophies:

Core Differences in Operation

Chatbots are fundamentally conversational tools responding within predefined scopes. They excel at answering FAQs, checking order status, or guiding users through simple processes using pattern matching or basic natural language processing.

AI Agents operate more independently, much like digital employees. They interpret nuanced instructions, break complex problems into steps, execute actions across multiple systems, and improve through experience. A customer support agent can independently research issues, check inventory, update tickets, and escalate only when necessary, all without step-by-step human direction.

Example: A chatbot might respond “Your order is being processed,” while an agent would independently check warehouse status, identify delays, contact suppliers, reroute shipments, and notify you of the new delivery date.

Comparison chart highlighting key differences between AI agents and chatbots across various dimensions such as autonomy, decisions, context, integration, learning, scope, architecture, and value

AI Agent Architecture and Memory Systems

Modern AI agents employ sophisticated memory architectures that distinguish them from traditional systems:

AI agent system architecture showing core LLM linked to knowledge, long memory, tools, and instructions

Three Layers of AI Agent Memory

Short-Term Memory (STM)

Stores information relevant to the current interaction. When you ask an assistant about France and then ask “What’s its capital?”, short-term memory lets it recognize that “its” refers to France. Without STM, each question would be processed in isolation, and the user would have to restate the full context every time.
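Short-term memory is often implemented as a bounded buffer of recent turns that is prepended to each new prompt. The sketch below assumes a simple text prompt format and uses a `deque` with `maxlen`. The `maxlen` eviction also shows why long conversations lose early context.

```python
from collections import deque


class ShortTermMemory:
    """Keeps only the most recent turns, similar to a fixed-size context window."""

    def __init__(self, max_turns: int = 4):
        self.turns = deque(maxlen=max_turns)  # oldest turns are evicted automatically

    def add(self, role: str, text: str) -> None:
        self.turns.append(f"{role}: {text}")

    def as_prompt(self, question: str) -> str:
        # Prepend recent history so the model can resolve references such as "its".
        return "\n".join([*self.turns, f"user: {question}"])


stm = ShortTermMemory(max_turns=2)
stm.add("user", "Tell me about France.")
stm.add("assistant", "France is a country in Western Europe.")
print(stm.as_prompt("What's its capital?"))
```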

Long-Term Memory (LTM)

The agent’s persistent knowledge base, which stores information for recall across sessions. This enables agents to recognize patterns, personalize responses based on history, and accumulate knowledge over months or years.

Feedback Loops

Dynamic learning systems that incorporate both explicit user feedback (“This was helpful”) and implicit feedback (users rephrasing queries when responses were inadequate). These loops continuously refine the agent’s knowledge and response generation.

How Memory Enables Continuous Learning

An AI customer service agent initially providing generic return instructions will, through feedback loops, learn that users repeatedly search for specific step-by-step guidance. It adapts by prioritizing more detailed, actionable instructions based on successful past interactions.
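The return-instructions example can be sketched as a long-term store of response variants scored by feedback. This is a toy: the intent name, variant names, and the plus-or-minus-one scoring rule are hypothetical. A rephrased query is treated as an implicit "not helpful" signal.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class FeedbackMemory:
    """Long-term store of response variants, scored by user feedback (toy)."""

    def __init__(self) -> None:
        self.scores: DefaultDict[Tuple[str, str], float] = defaultdict(float)

    def record(self, intent: str, variant: str, helpful: bool) -> None:
        # Explicit "helpful" adds a point; "not helpful" or an implicit signal such as
        # the user rephrasing the question subtracts one.
        self.scores[(intent, variant)] += 1.0 if helpful else -1.0

    def best_variant(self, intent: str, variants: List[str]) -> str:
        # Ties go to the first variant listed, so the default wins until feedback arrives.
        return max(variants, key=lambda v: self.scores[(intent, v)])


memory = FeedbackMemory()
variants = ["generic_instructions", "step_by_step_guide"]
print(memory.best_variant("returns", variants))  # generic_instructions
memory.record("returns", "generic_instructions", helpful=False)  # user rephrased
memory.record("returns", "step_by_step_guide", helpful=True)     # user clicked "helpful"
print(memory.best_variant("returns", variants))  # step_by_step_guide
```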

This three-layer memory architecture transforms AI agents from stateless tools into long-term contributors that improve with time and experience.


Real-World Applications of AI Agents

AI agents are transforming operations across industries through autonomous decision-making and task execution:

Customer Service & Support

Autonomous agents handle inquiries, troubleshoot issues, and escalate complex problems. Unlike chatbots, they access multiple systems to provide comprehensive solutions and learn from customer feedback to improve responses.

Supply Chain & Logistics

Agents analyze sales data, inventory levels, supplier performance, and external factors to predict demand, optimize orders, and dynamically reroute shipments without human intervention.

Healthcare Administration

Scheduling appointments, processing insurance claims, managing patient records, and coordinating with specialists—agents automate administrative tasks while maintaining compliance and privacy standards.

Financial Services

Risk assessment, fraud detection, algorithmic trading, and investment recommendations based on market analysis. Utility-based agents evaluate risk-return tradeoffs and execute transactions autonomously.

Software Development

Code generation, debugging, testing, and documentation creation. Agents analyze requirements and incrementally develop solutions, learning from test failures to refine code.

Business Analytics

Processing large datasets to identify trends, generate actionable insights, and create customized reports. Agents access real-time data streams and synthesize information across domains.

Cybersecurity

Real-time threat detection, adaptive threat hunting, and automated incident response. Security agents identify suspicious patterns and take corrective action before human analysts intervene.

Key differences between AI agents and AI assistants covering autonomy, decision-making, complexity, and examples

Benefits and Advantages of AI Agents

Organizations implementing AI agents experience measurable advantages:

Enhanced Productivity & Cost Efficiency

Agents automate both routine and complex tasks, freeing human workers for higher-value activities. Some companies report up to a 90% reduction in operational costs for routine tasks and a 45% increase in task automation efficiency, although results vary widely by use case.

Improved Decision-Making

Because they process large datasets far faster than humans, agents can surface patterns and support decisions with machine learning, combining real-time data with historical knowledge to reach better-informed outcomes.

24/7 Continuous Operations

Unlike human workers, AI agents operate without breaks, increasing operational efficiency and responsiveness across all time zones and conditions.

Scalability Without Linear Growth

Agents scale horizontally without proportional staffing increases, allowing organizations to support more business use cases without expanding headcount.

Adaptive Context Awareness

Agents understand nuanced situations, learn from feedback, and modify approaches based on changing circumstances—adapting to each unique scenario rather than applying rigid rules.

Challenges and Limitations of AI Agents

Despite promising capabilities, AI agents face significant challenges:

Hallucinations and Reliability

AI agents frequently generate false or misleading information, and errors compound across steps. If each step is 95% accurate and errors are independent, a 10-step task succeeds only about 60% of the time (0.95^10 ≈ 0.60).
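The compounding-error figure is simple arithmetic. This sketch assumes every step succeeds independently with the same probability, which real agents only approximate. It also inverts the formula to show how reliable each step must be for a whole chain to meet a target.

```python
def chain_success(per_step: float, steps: int) -> float:
    # Probability that every step succeeds, assuming independent, equally reliable steps.
    return per_step ** steps


def required_per_step(target: float, steps: int) -> float:
    # Per-step accuracy needed for the whole chain to reach `target`.
    return target ** (1 / steps)


for n in (1, 5, 10, 20):
    print(n, round(chain_success(0.95, n), 3))
print(round(required_per_step(0.90, 10), 4))
```

With 95% per-step accuracy, this gives about 0.774 after 5 steps, 0.599 after 10, and 0.358 after 20. Keeping a 10-step chain at 90% overall reliability requires roughly 98.95% accuracy per step.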

Memory and Context Limitations

Most agents struggle to maintain context across long conversations or complex multi-day tasks. Limited memory retention reduces their effectiveness for extended workflows.

Complex Reasoning Deficits

Agents have limited metacognitive abilities (awareness of what they do and do not know) and struggle with truly novel situations that require sophisticated reasoning beyond their training.

Integration Complexity

Connecting agents to legacy enterprise systems, outdated databases, and complex workflows requires substantial custom development.

Cost and Resource Requirements

Running sophisticated agents demands significant computational resources. API call costs, vector database storage, and infrastructure quickly escalate for high-volume applications.

Unpredictable Behavior

Because LLM outputs are usually sampled rather than computed deterministically, identical inputs may produce different outputs. Without additional safeguards, this makes agents a poor fit for mission-critical applications that require consistency.
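One source of this variability is temperature sampling. The model scores candidate next tokens, and the decoder samples from a softmax over those scores. This sketch uses hypothetical scores for three action words. At temperature 0 it falls back to greedy decoding and always returns the same token. Hosted models may still vary slightly even at temperature 0, so this reduces variability but does not guarantee determinism.

```python
import math
import random
from typing import Dict


def sample_next_token(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    if temperature == 0:
        # Greedy decoding: always return the highest-scoring token.
        return max(logits, key=logits.get)
    # Softmax with temperature; higher temperature flattens the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    weights = [math.exp(s - top) for s in scaled.values()]  # subtract max for stability
    return rng.choices(list(scaled), weights=weights, k=1)[0]


logits = {"approve": 2.0, "escalate": 1.5, "reject": 0.5}  # hypothetical model scores
rng = random.Random()
print({sample_next_token(logits, 0.0, rng) for _ in range(20)})  # {'approve'}
print({sample_next_token(logits, 1.0, rng) for _ in range(20)})  # usually several tokens
```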

Security and Trust Issues

AI agents require well-defined operational boundaries, role-based access controls, and robust governance frameworks. The “double agent” problem means agents could be manipulated to cause harm if not properly secured.

Diagram showing the components and interactions in multi-agent planning including environment, agents, communication, and collaboration

AI Agent Frameworks and Development Tools

The development ecosystem for AI agents matured significantly in 2025:

Popular Frameworks

LangChain (55.6% adoption)

Dominates the agent development stack, acting as the glue between LLMs, vector databases, and external tools. Facilitates memory management and tool calling.

CrewAI (9.5% adoption)

Python framework for multi-agent systems with user-friendly APIs. Enables agents to collaborate with specialized roles, backstories, and task delegation.

Microsoft AutoGen (5.6% adoption)

Framework for building conversational multi-agent systems, with support for scalable, distributed applications and real-time collaboration between agents.

LlamaIndex (7.1% adoption)

Specializes in retrieval, giving agents structured access to enterprise knowledge bases and data sources.

Phidata

Multimodal framework (since renamed Agno) that lets agents process text, images, and audio, with built-in memory and tool support.

LLM Choices

OpenAI Models (73.6% of projects)

Remains the default choice for agent development, with broad capabilities and reliability.

Claude (16.6%)

Growing preference among enterprises valuing safety and alignment.

Other Models: Google Gemini (3.9%), Meta Llama (2.8%), and open-source alternatives continue gaining ground.

The Future of AI Agents: Trends and Predictions

2025-2026 Outlook

Multi-Agent Collaboration

No longer merely experimental, frameworks now enable production-ready multi-agent systems where specialized agents coordinate to solve complex problems.

Personalization & Contextual Intelligence

Agents will provide increasingly tailored experiences based on accumulated user history and preferences, adapting tone and suggestions dynamically.

Enhanced Interoperability

Greater integration between tools and platforms will enable smoother collaboration across diverse systems with less custom development.

Democratized Development

Easier-to-use frameworks will make agentic AI accessible to non-specialists, accelerating adoption across industries.

Regulatory Frameworks

Governance structures and ethical guidelines for autonomous agent behavior are rapidly developing to ensure responsible deployment.

Market Projections

The global agentic AI tools market is experiencing explosive growth:

- Market Size in 2025: $10.41 billion

- Compound Annual Growth Rate (CAGR): ~56.1%

- Prediction by 2029 (Gartner): AI agents will autonomously resolve 80% of common customer service issues without human intervention
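To see what a 56.1% CAGR implies, the growth formula can be applied to the 2025 figure. This is illustrative arithmetic only. It assumes the rate stays constant every year and is not a forecast.

```python
def project_market(base: float, cagr: float, years: int) -> float:
    # Compound growth: value_n = base * (1 + cagr) ** n
    return base * (1 + cagr) ** years


for year in range(2025, 2030):
    print(year, round(project_market(10.41, 0.561, year - 2025), 2))
```

Under that assumption, the market would reach roughly $61.8 billion by 2029, about six times the 2025 size. This shows how sensitive such projections are to a sustained high growth rate.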

Emerging Considerations

Security and Identity Management

AI agents require verifiable identities and zero-trust security approaches to prevent manipulation and ensure accountability.

Relationship Ethics and Accountability

As humans develop long-term relationships with AI agents, frameworks ensuring accountability must be built into these systems.

Enterprise Readiness

While promising, organizations still need to build governance frameworks, compliance standards, and error recovery mechanisms before enterprise-wide deployment.

Implementation Best Practices

Organizations planning AI agent deployment should consider:

1. Clear Goal Definition

Establish well-defined objectives and success metrics before development. Ambiguous goals lead to unpredictable agent behavior.

2. Robust Testing and Validation

Implement comprehensive testing with real-world scenarios and edge cases. Test agents in controlled environments before production deployment.

3. Gradual Rollout Strategy

Begin with low-risk applications before expanding to critical systems. This approach allows refinement and builds organizational confidence.

4. Human-in-the-Loop Governance

Implement human oversight for high-impact decisions. Maintain approval workflows for critical actions and establish clear escalation paths.
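A human-in-the-loop gate can be as simple as a policy check before execution. This is a minimal sketch: the list of high-impact actions, the cost limit, and the `approve` callback are hypothetical. In practice, `approve` would open a review ticket or send an approval request rather than return immediately.

```python
from typing import Callable

# Hypothetical policy: these actions always need sign-off, as does anything above a cost limit.
HIGH_IMPACT_ACTIONS = {"issue_refund", "delete_account"}
COST_LIMIT = 100.0

Approver = Callable[[str, float], bool]


def run_action(action: str, amount: float, approve: Approver) -> str:
    needs_approval = action in HIGH_IMPACT_ACTIONS or amount > COST_LIMIT
    if needs_approval and not approve(action, amount):
        return f"{action}: blocked pending human review"
    return f"{action}: executed"


def always_reject(action: str, amount: float) -> bool:
    return False


print(run_action("update_ticket", 0.0, always_reject))  # update_ticket: executed
print(run_action("issue_refund", 50.0, always_reject))  # issue_refund: blocked pending human review
```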

5. Continuous Monitoring and Optimization

Establish observability dashboards tracking agent performance, error rates, and user satisfaction. Use metrics to continuously refine agent behavior.

6. Security and Compliance

Define operational boundaries with role-based access controls. Ensure agents operate within compliance requirements and maintain audit trails for accountability.
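Role-based boundaries and audit trails can be combined in a single checkpoint that every tool call passes through. The roles, tool names, and tuple-based log format below are hypothetical. A production system would persist the log and tie each role to a verifiable agent identity.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical roles and the tools each may call (least privilege).
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "support_agent": {"read_ticket", "update_ticket"},
    "billing_agent": {"read_ticket", "issue_refund"},
}


class PermissionDenied(Exception):
    pass


def call_tool(role: str, tool: str, audit_log: List[Tuple[str, str, str]]) -> str:
    allowed = tool in ROLE_PERMISSIONS.get(role, set())
    # Record every attempt, allowed or not, so actions can be reviewed later.
    audit_log.append((role, tool, "allowed" if allowed else "denied"))
    if not allowed:
        raise PermissionDenied(f"{role} may not call {tool}")
    return f"{tool} executed"


log: List[Tuple[str, str, str]] = []
print(call_tool("support_agent", "update_ticket", log))
try:
    call_tool("support_agent", "issue_refund", log)
except PermissionDenied as err:
    print("blocked:", err)
print(log)
```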

Conclusion

AI agents represent a fundamental evolution in artificial intelligence, moving from reactive, prompt-dependent systems to autonomous, goal-oriented entities capable of complex reasoning, planning, and execution. The distinction from traditional chatbots is profound: where chatbots follow scripts, agents reason; where chatbots respond reactively, agents plan proactively; where chatbots remain static, agents continuously learn and adapt.

The rapid maturation of development frameworks, LLMs, and supporting infrastructure in 2025 has moved many AI agent use cases from experimental prototypes toward production-ready solutions. Organizations across customer service, supply chain, healthcare, finance, and software development are reporting tangible benefits from agent deployments.

However, success requires more than technological capability. Organizations must address reliability challenges, build robust governance frameworks, establish trust through transparency and accountability, and ensure agents operate within well-defined boundaries that align with organizational values.

As we advance deeper into 2025 and beyond, AI agents will continue evolving toward increasingly sophisticated multi-agent systems, broader contextual understanding, and more accessible development tools. The organizations that strategically implement AI agents today—starting with well-scoped applications and gradually expanding—will be best positioned to harness their transformative potential in reshaping how work gets done.

The question is no longer whether AI agents will transform enterprises and workflows. The evidence is clear. The real question now is: How quickly will your organization adapt to leverage this technology before competitors do?

FAQs: Understanding AI Agents

1. What is the main difference between an AI agent and a chatbot?

AI agents are autonomous systems that independently plan, reason, and execute complex, multi-step tasks with minimal human input. They maintain memory across sessions, learn from interactions, and make independent decisions. Chatbots, by contrast, are reactive conversational tools that respond to user prompts within predefined scopes using scripted responses. While a chatbot answers “Your order status is pending,” an AI agent independently checks inventory, identifies delays, coordinates with suppliers, and proactively notifies you of updated delivery information.

2. How does an AI agent learn and improve over time?

AI agents learn through three mechanisms: short-term memory maintains context during current interactions, long-term memory stores accumulated knowledge and patterns, and feedback loops enable continuous learning. When users provide feedback or implicitly signal dissatisfaction (by rephrasing queries), agents capture these signals, generate generalized rules, and apply them to future decisions. This three-layer memory system transforms agents into long-term contributors that become increasingly effective with experience.

3. Can AI agents work together in multi-agent systems?

Yes, modern AI agents are designed for collaboration. Multi-agent systems involve specialized agents with distinct roles coordinating to solve problems too complex for a single agent to handle well. Different agents might handle research, analysis, writing, and quality review, communicating findings and building on each other’s work. This collaborative approach improves resilience, enables specialization, and can handle significantly more complex workflows than individual agents.
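A research → write → review workflow can be sketched as specialists passing messages through an orchestrator, with the reviewer able to send work back. Here each "agent" is a plain function standing in for an LLM-backed agent (all names are hypothetical). This shows only one coordination pattern, a sequential pipeline with a revision loop. Frameworks such as CrewAI and AutoGen offer others.

```python
from typing import List, Optional, Tuple


def researcher(task: str) -> dict:
    # Hypothetical specialist; in practice an LLM call with search tools.
    return {"topic": task, "sources": ["internal wiki", "sales dashboard"]}


def writer(notes: dict, feedback: Optional[str] = None) -> str:
    draft = f"Report on {notes['topic']}."
    if feedback == "cite sources":
        draft += " Sources: " + ", ".join(notes["sources"]) + "."
    return draft


def reviewer(draft: str) -> Optional[str]:
    # Returns None to approve, otherwise a revision request for the writer.
    return None if "Sources:" in draft else "cite sources"


def orchestrate(task: str, max_rounds: int = 3) -> Tuple[str, List[str]]:
    transcript = []
    notes = researcher(task)
    transcript.append("researcher: gathered notes")
    draft, feedback = "", None
    for _ in range(max_rounds):  # cap revisions so the loop always terminates
        draft = writer(notes, feedback)
        transcript.append(f"writer: {draft}")
        feedback = reviewer(draft)
        transcript.append(f"reviewer: {feedback or 'approved'}")
        if feedback is None:
            break
    return draft, transcript


final_report, transcript = orchestrate("Q3 customer churn")
print("\n".join(transcript))
```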

Diagram comparing single agent and multi agent AI systems showing task division and collaboration

4. What are the main security concerns with AI agents?

Key security concerns include: hallucinations (generating false information), unpredictability (identical inputs producing different outputs), integration vulnerabilities, and the “double agent” problem where agents could be manipulated to cause harm. Organizations must implement zero-trust security approaches, role-based access controls, comprehensive audit trails, approval workflows for critical actions, and clear accountability frameworks.

5. How do AI agents handle unexpected situations?

Modern AI agents employ reasoning modules and planning capabilities to assess novel situations. Rather than following rigid scripts, agents decompose unfamiliar problems into components, search memory for related past experiences, evaluate possible approaches, and select strategies most likely to succeed. This adaptive reasoning allows agents to handle ambiguity and scenarios outside their training—though reliability remains lower than for familiar tasks.

6. What industries benefit most from AI agents?

Industries with high-volume, complex workflows see the greatest benefits: customer service (handling diverse inquiries), supply chain management (dynamic optimization), healthcare (administrative automation and clinical support), financial services (trading and risk management), software development (code generation and testing), and cybersecurity (threat detection and response). Essentially, any domain with repetitive decision-making or complex multi-step processes benefits from autonomous agents.

7. How do I know if my organization is ready for AI agents?

Readiness factors include: clear definition of target use cases where agents can add value, availability of quality training data and integration points, organizational willingness to evolve processes, adequate governance and oversight capabilities, technical infrastructure for APIs and data access, and stakeholder buy-in for the transition. Starting with low-risk applications while building organizational expertise and governance frameworks is the recommended approach.

8. What’s the difference between agentic AI and AI agents?

AI agents are software systems with autonomy to make decisions and execute tasks. Agentic AI is a broader concept describing systems with agency—the ability to operate independently across multiple domains, learn continuously, and pursue goals with minimal supervision. Agentic AI represents the evolution toward more sophisticated, truly autonomous systems, while AI agents are the primary implementation of this paradigm.

9. How much does it cost to implement AI agents?

Costs vary widely based on complexity and scale. Basic implementations might cost $50,000-$200,000 for development, while enterprise-grade multi-agent systems can exceed $1 million. Ongoing costs include LLM API calls (typically $100-$10,000+ monthly depending on usage), vector database storage, cloud infrastructure, and development/maintenance staff. ROI often justifies costs through labor savings, efficiency gains, and improved decision-making.

10. What skills do I need to build AI agents?

Essential skills include: Python programming (most frameworks use Python), understanding of large language models and prompt engineering, familiarity with frameworks like LangChain or CrewAI, knowledge of APIs and system integration, database and data structure experience, and understanding of machine learning fundamentals. Many tools are becoming more accessible to developers without deep AI expertise, though understanding LLM behavior and limitations remains important.